There can be no talk of “outcomes over outputs” until teams stop believing that outputs are scarce. The path to do that lies not through automating outputs, but through using UX methods to find the bottlenecks in your process.

This is part of an article series (starting here) exploring how every participant in the software design process can apply design decision-making methods to the way we organize teams, plan work, and build products, rather than just to the products themselves. I hope to make these tools useful and relevant to readers — if what I am describing doesn’t fit your experience, don’t hesitate to drop me a line.

“Outcomes over outputs” is such a common topic in transformation efforts that you would be forgiven for thinking the idea is much younger than its venerable 26 years. But despite spending the better part of three decades adopting intervention logic, product development seems to be no closer to eradicating output-first thinking.

Even the product leaders ostensibly advocating for outcomes in the first place reveal that their focus is squarely on outputs when they talk about their strategy. Nowadays, it’s about using generative AI approaches to scaling: generating ideas and putting them into production faster than ever before.

This strategy only makes sense if these leaders believe that the biggest obstacle to their orgs’ ability to scale is a scarcity of outputs. And as we remember from Econ 101, scarcity creates value. Is it any surprise then that their orgs value outputs above all else?

Except this value is illusory. Outputs were never scarce. Ideation and delivery were solved problems long before even GPT-1.

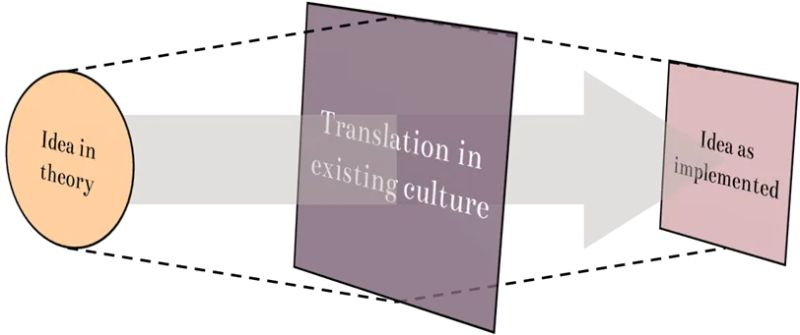

The efficacy of any intervention is modulated by the system already in place. This idea from Charles Lambdin is going to help us along the way.

The most likely problem with your org isn’t that it can’t produce outputs — it’s that it keeps forgetting about them. To plug that leak, we need to consult the science of talking to systems: user research.

The mystery of the missing outputs

There is no such thing as an original idea. — Mark Twain

The myths of “builders” and “visionaries” resonate very strongly with the STEM crowd. There’s no use in standing around talking when we could simply be having original ideas and then immediately iterating in code until we find product-market fit. When the projects keep failing, real builders just try again, while users anxiously wait for us to solve all their problems.

In other words, the “builder” culture is a culture of waste. Fortunately, we now have a way to recycle all those fermented ideas and derelict codebases: training sets for large language models.

When you ask GPT-4 or Claude to give you a set of ideas or a feature implementation, it doesn’t sift through the collective knowledge of humankind to draw conclusions about what you should do. Rather, it produces the most probable reply according to its corpus. Statistically, anything an LLM could tell you has already occurred to a salaried human in your company. Any code it writes is the most average solution to the problem posed to it (if it works).

The main difference is that the AI does this very quickly. And if you work in a company that ships features as quickly as teams can come up with them, that might be useful for you. But in most companies, the AI’s outputs will gather dust on the shelf right next to the human ones, and relying on AI to scale your outputs just results in fuller shelves and more dust.

The trouble with the AI’s suggestions is that you don’t work at a statistically average company. The path ideas take between conception and production will be unique. The bottleneck to consistent outputs will be squishy and human — and you will need a human approach to find it, an approach that can learn without waste.

Foraging for ideas

Only a fool learns from his own mistakes. The wise man learns from the mistakes of others. — Otto von Bismarck

User research gets a bad rap in product circles. The complaint that “research slows us down” is thoroughly debunked about once a week, but far more insidious is the assumption that researchers are not necessary because a PM should already know what users need.

But the value of a user researcher is not having someone go talk to people while product managers “do the real work” (updating Jira). The value is in the lens through which a user researcher looks at the world. And that lens could not be more different from a PM’s.

Product development methods focus on potential futures. We talk about desired states, quarterly roadmaps, and uncertainty margins on our bets and experiments. Software delivery teams organize around shipping first, under the assumption that after shipping they will be in a better place to “measure” and “learn” about how users engage with what they built. They “move fast and break things” and ask for forgiveness afterwards rather than permission beforehand.

Our assumptions don’t always match reality.

User research, on the other hand, looks at the experienced past. It’s an axiom of the profession that research participants are unreliable at predicting future behavior; their past behavior is where we look for insights. While our research objective is to discover desired future states, our interview questions focus on what has already happened: How have you…? How did you…? The last time this happened, what did you…?

This approach is powerful because it engages with lived reality — not generic, idealized, or textbook practices but the truth of how people cope with the messy and often confusing tangle of competing demands on their time and attention.

And rather than casting users in the role of passive damsels in distress, it reveals the dizzying array of solutions and stopgaps that users, experts on their own experience, create. In fact, one of the more surefire signs of a durable need is that users everywhere are trying to solve the problem on their own.

One of the best ways to understand a problem is to look at the “life hacks” your users solve it with today.

We can apply this lens to our product practice with one simple question.

What have we already tried?

My evergreen response to so many questions is: “What have you tried?” — Tatiana Mac

Through tools that engage effectively with the past, user research leverages the existing experience of people who have already tried to solve the same problem we are solving. Instead of treating “ideas” as something only we can come up with, user researchers open a book that’s already been written by dozens of co-authors and copy their notes.

The same tools let us access an equally significant body of evidence within our own organizations: what other teams have tried to build before us, and where their attempts fell short.

And let’s face it: it’s extremely likely that what you’re working on has already been tried before. You are not the first one to come up with “users want a dashboard” or “we should have a Reels feature.” But few teams document their mistakes and even fewer teams advertise them, so without a bit of digging you won’t know that your idea has been tried and failed before — and you certainly won’t know why.

A “vertical slice” of a product is not just cut through the full breadth of use cases, but through your team’s entire design and development process.

Orgs lie to themselves with roadmaps all the time, but the track record of what was actually shipped always tells the truth. Your process is likely still broken in the same way that it was broken for those who came before you. Rather than burning political capital on finding out how the hard way, you can choose the easy way. Instead of hoping that this time the Best Practices approach will surely work, you can fix the underlying conditions that have been causing it to fail.

Stop shoving more outputs through the process in the hope that one of them will make it through, and start widening the bottleneck.

“What have we already tried?” is the most powerful product question you can ask was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.