Sharing insights into the user’s experience can help us show why content design matters

At Parent Talk, Action for Children’s online parenting support service, we publish advice for parents and carers on a wide range of topics. We make sure we prioritise the issues our users need support with through an evidence-led approach to content.

But you never really know how well a service or a piece of content is going to do until it’s out in the world. Because of this, we track our impact in a few different ways. This involves gathering data to check whether our service is meeting people’s needs. More recently, we’ve realised it’s also a way for us to demonstrate the difference that evidence-based content design is making.

This is something we’re able to share within the organisation, and with funders and other stakeholders, to make the case for the investment needed to help more parents and carers. It also gives us a remit to keep working on improvements where we know they’re needed, because we can see that our work can make a very real difference to people in need of support.

Collecting feedback from users over time

We use the tool Askem to collect feedback on whether people found our articles useful or not. This gives us:

- information on whether we’re answering the questions of parents and carers well enough
- specific, actionable feedback on what to improve
- data on user satisfaction over time

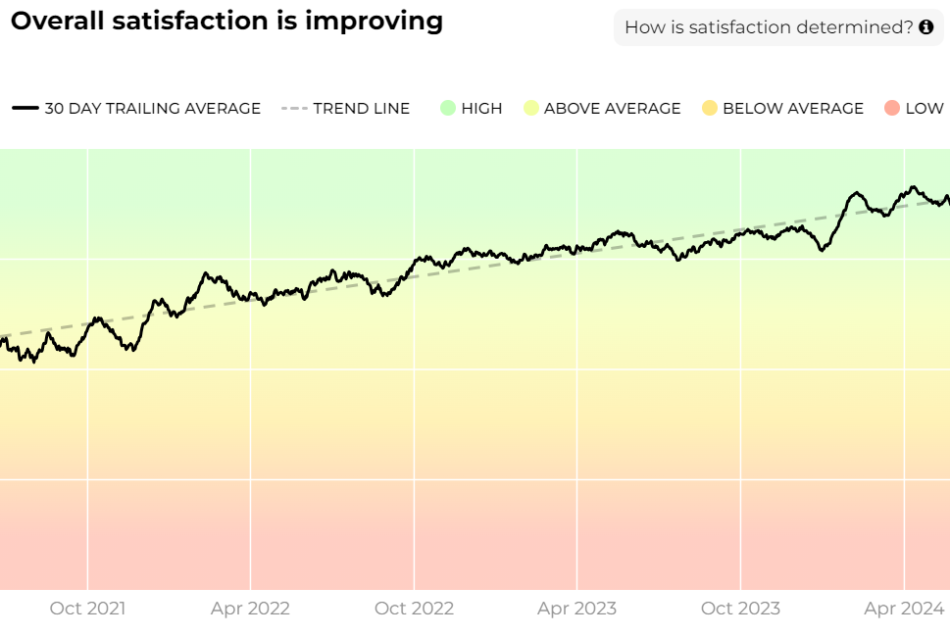

Since we started collecting user feedback on our content through the website in 2021 and making changes as a result, we’ve seen an increase in overall satisfaction.

Graph from Askem feedback tool showing increased user satisfaction for content over time

We know there is more to do (there is always more to do!) but this helps to illustrate how taking a data-led, user-centred approach to creating and improving content helps us better meet people’s needs. It also gives us motivation to keep doing what we’re doing.

We also use the tool to track the impact of individual projects, such as when we’re testing new content formats.
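For anyone who wants to track a similar trend from their own feedback data, the calculation can be sketched roughly as below. This is only an illustration: the records are hypothetical, the function name `satisfaction_by_year` is made up for the example, and it does not reflect how Askem stores or reports feedback.

```python
from collections import defaultdict

# Hypothetical feedback records: (year, was_useful).
# These are illustrative values, not real Parent Talk or Askem data.
feedback = [
    (2021, True), (2021, False), (2021, False), (2021, True),
    (2022, True), (2022, True), (2022, False), (2022, True),
    (2023, True), (2023, True), (2023, True), (2023, False), (2023, True),
]


def satisfaction_by_year(records):
    """Return {year: share of responses marked useful}, as a fraction from 0 to 1."""
    useful = defaultdict(int)
    total = defaultdict(int)
    for year, was_useful in records:
        total[year] += 1
        if was_useful:
            useful[year] += 1
    # Sort the years so the trend reads in chronological order.
    return {year: useful[year] / total[year] for year in sorted(total)}


rates = satisfaction_by_year(feedback)
for year, rate in rates.items():
    print(f"{year}: {rate:.0%} found the content useful")
```

Looking at the share of positive responses, rather than the raw count, keeps years with different amounts of feedback comparable.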

If and how people are finding our advice

Our service can only support parents and carers if they can find us in the first place. But Parent Talk doesn’t have a big advertising budget, so we need to work that bit harder to let people know we can offer support. One of the ways we do this is by using the language they’re using to find help online.

We use data from search listening to understand what people need help with, and what words they’re using to describe their problem. This has allowed us to make sure our content is:

- addressing what people need help with (rather than what we think we should write about, based on our assumptions)
- easier to understand, because we’re using language people recognise
- easier for people to find on search engines like Google, because we know what they’re searching for

By making our content easier for people to find online, we’re helping them solve their problems by offering access to advice from experienced parenting coaches. From a business impact perspective, this has also saved us money and resulted in an increase in service reach.

In the year 2020/21, only 8% of our visitors found us through organic search — the majority found us through paid advertising. In 2023/24, 73% of our traffic came from organic search. This shows the impact of our content design approach on our services, both for parents and carers and the charity.*

*Google’s latest AI developments may be changing how we need to approach SEO, but for now putting the user first still has a positive effect on search results. We’re monitoring the situation so that we’re able to make changes if they’re needed.
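A figure like the share of traffic from organic search is a simple proportion of visits per channel. A minimal sketch of that arithmetic follows, assuming you can export visit counts by channel from your analytics tool. The channel names and counts are hypothetical and are not Parent Talk’s figures.

```python
def channel_share(visits_by_channel):
    """Return each channel's share of total visits as a fraction from 0 to 1."""
    total = sum(visits_by_channel.values())
    if total == 0:
        # No visits recorded, so every channel has a zero share.
        return {channel: 0.0 for channel in visits_by_channel}
    return {channel: count / total for channel, count in visits_by_channel.items()}


# Hypothetical visit counts for one year (illustrative only).
visits = {"organic_search": 600, "paid_advertising": 300, "other": 100}

shares = channel_share(visits)
for channel, share in shares.items():
    print(f"{channel}: {share:.0%}")
```

Comparing the same calculation across years shows whether people are increasingly finding the content through search rather than through paid advertising.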

Testing with our users

Testing our service and content with our users helps us identify opportunities for improvement and information gaps. But it also shows us what we’re doing right. For example, when we tested our content and service with a range of parents and carers to understand if it was inclusive, they told us it:

- felt relevant, reassuring and realistic
- made them feel seen and respected as a parent
- helped them feel that others were experiencing similar problems
- offered useful coping strategies

We were able to share this with the charity to illustrate what we were doing well. We also heard specific feedback on what wording we needed to improve, and what parts of the service made people feel less sure whether it was intended for them. This meant that we could plan for improving the inclusivity of the service, which was the aim of the testing.

In terms of measuring impact, having a benchmark through user testing for where we are and where we want to go helps us deepen our own understanding of how well we’re supporting people. It helps us think about how we can do things more effectively in the next few years.

Some of the tests we’ve done with users to understand how well we’re supporting people include:

- observing how people use the service and what they find easy or difficult
- asking people to highlight content that they find helpful or unhelpful
- giving users a selection of description cards to prompt discussions about their initial reactions to the service
- using a screenreader to navigate the service, to understand how accessible it is to blind or partially sighted users
- giving people tasks to complete on new website structures, to understand which route they would take to find the information

We’re also planning testing of our chat function to explore how people feel about the content they get when they start using this part of the service.

Spotting gaps in our data and barriers in the service

We’re a small team working with a small budget, which means we can’t always improve the service as soon as we spot an opportunity. Nothing is ignored, but we have to prioritise changes and make sure we’re logging all potential issues.

Working out what we don’t know, or what we’re not doing so well, helps us see where we could be having a negative impact instead of a positive one. This is important when your service is used by people needing support. It helps us plan the most urgent development work and helps us pitch for funding to improve the service.

For example, we might identify:

- a gap in data, which affects our understanding of our user needs
- an issue in how well people can access the website or chat
- wording that makes it harder for someone to understand our advice

We recently used a tool from the Defra Accessibility Team to estimate how many disabled users could be using the Parent Talk website. This helped us to understand, and have discussions about, how disabled people may be struggling to access our service and the impact this could have on them. This led to screenreader testing, currently in its first phase, which should result in a better experience for our users.

If we’re willing to talk about the gaps and issues with a service, we can have honest conversations with the charity and funders. We can start to work out if content design can help to solve problems and see how we might have more positive impact. Communicating the potential negative impact of allowing the status quo to continue is a powerful tool for change. And as long as we have measurable goals, we’ll be able to see the difference our work can make.

Showing, not telling

While we’re getting better at sharing the impact of our content and service design work, we know there’s more we could be doing.

We’re looking at taking inspiration from Parent Talk’s chat function, where service visits have been helping people grasp the impact of the support offered in this way. At these service visits, the team has been able to show the organisation and funders the impact through:

- transcripts of conversations on the chat service so they can see the issues people are facing
- feedback from users talking about how the chat service helped them
- case studies of how we’ve helped families tackle a challenge

Giving others access to the words of our users is changing the way people understand the service, in a way that talking about it never has. The next challenge for us as a team is how we do something similar for content and service design. We’re working on ways to share the user’s voice with others, and will be documenting the results.

Ruth Stokes is a Senior Content Designer at the UK charity Action for Children, working on the Parent Talk service

Tracking the impact of our content design work was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.