Facial expressions don’t always reveal true emotions — but that hasn’t stopped AI from trying to analyze them anyway.

“How emotion recognition software strengthens dictatorships and threatens democracies”, source

Imagine walking into a classroom where AI-powered cameras track students’ facial expressions, rating their attentiveness and engagement in real time. Or picture a security checkpoint where an AI system silently analyzes your micro-expressions and subtle head movements, assessing whether you’re a potential threat — without you even knowing. What might sound like science fiction is already a reality — emotion recognition AI is actively turning our emotions into data points, analyzing, categorizing, and interpreting them through machine algorithms.

Though not yet widespread, emotion recognition technology is already being developed and deployed by both governments and private companies. As its use expands, it raises important discussions about accuracy, ethics, and the role of AI in analyzing human emotions.

“The global emotion detection and recognition market size was valued at $21.7 billion in 2021 and is projected to reach $136.2 billion by 2031” — Allied Market Research

But here’s the problem: these systems don’t actually work the way they claim to — and you’re lucky if they even tell you explicitly that you’re being analyzed.

Despite their growing adoption, emotion recognition technologies remain highly debated. These systems analyze facial expressions, vocal tones, and physiological signals to infer emotions, yet human emotions are complex, influenced by context, culture, and individual differences. While AI can detect patterns in outward expressions, accurately interpreting emotions remains a significant challenge, raising questions about reliability, privacy, and ethical considerations.

Recognizing these risks, the European Union’s AI Act takes a bold stance: it prohibits emotion recognition AI in workplaces and educational institutions, except for medical or safety reasons, and treats other uses as high-risk. Regulators argue that the potential for discrimination, mass surveillance, and human rights violations far outweighs any supposed benefits.

So, why exactly is emotion recognition AI so problematic? And should there be any exceptions for its use? Let’s dive into the science, real-world examples, and legal justifications behind this landmark decision.

Your face doesn’t tell the whole story — but AI thinks it does

In theory, emotion recognition AI promises something revolutionary: the ability to “read” human emotions from facial expressions, voice tones, and physiological signals. The idea is simple — if humans can intuitively recognize emotions in others, why not train AI to do the same?

But there’s a problem: humans don’t express emotions in a universal, one-size-fits-all way. And yet, most emotion recognition systems operate under that flawed assumption, reducing complex human experiences into a set of predefined labels.

How emotion recognition AI works — and why it’s flawed

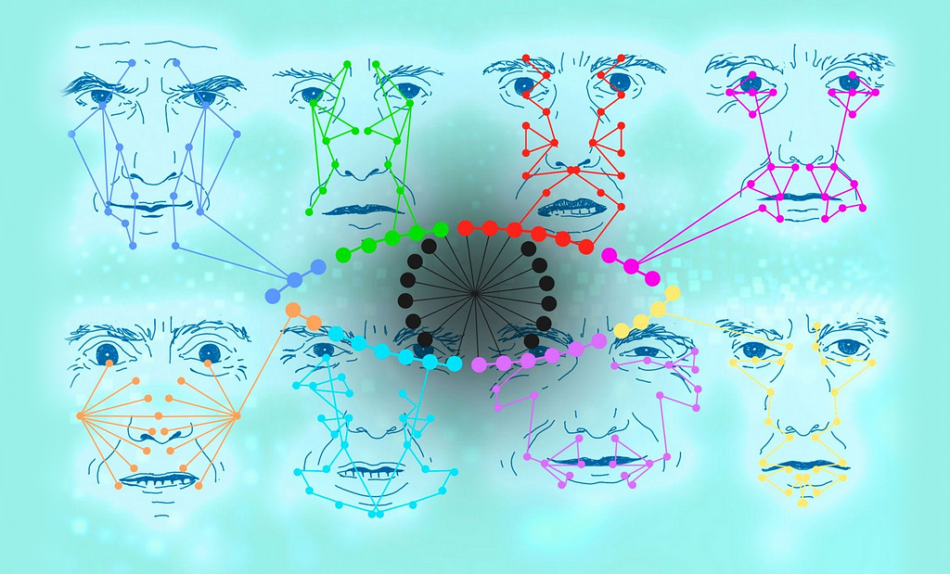

Emotion recognition systems use machine learning models trained on large datasets of human expressions, speech, and biometric data. They rely primarily on facial expressions: the AI scans micro-expressions (tiny, involuntary facial movements) and assigns each face an emotion label.

Most of these systems, MorphCast among them, are built on Paul Ekman’s “Basic Emotions” theory, which holds that all humans express six universal emotions (happiness, sadness, anger, fear, disgust, surprise) in the same way.

Actor Tim Roth portraying facial expressions and their explanation, source

But is it truly set in stone that these six universal emotions are expressed through identical facial movements across all people?

Dr. Lisa Feldman Barrett (Harvard neuroscientist & psychologist), in her book How Emotions Are Made (2017), argues that emotions are not biologically hardwired or universal, but rather constructed by the brain based on context, culture, and past experiences. Her 2019 meta-study, which reviewed over 1,000 studies, found no consistent evidence for universal facial expressions tied to emotions.

Dr. James A. Russell, who developed the Circumplex Model of Affect, also challenges the idea that emotions fit into fixed categories. He argues that emotions exist on a spectrum of valence (pleasant-unpleasant) and arousal (high-low) rather than being discrete, universal states.

Dr. José-Miguel Fernández-Dols, who studied real-world emotional expressions (e.g., Olympic athletes on the podium), found no universal correlation between facial expressions and emotions.
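To make the contrast between fixed categories and a valence-arousal spectrum concrete, here is a minimal Python sketch. The coordinates in `PROTOTYPES` are illustrative placements invented for this example, not values published by Russell or used by any real product, and `nearest_label` is a hypothetical helper. It only shows what is lost when a point on a continuous plane is forced into one of six labels.

```python
import math

# ASSUMPTION: illustrative (valence, arousal) placements in [-1, 1].
# These numbers are made up for the example, not taken from any study.
PROTOTYPES = {
    "happiness": (0.8, 0.5),
    "sadness": (-0.7, -0.5),
    "anger": (-0.7, 0.7),
    "fear": (-0.6, 0.8),
    "disgust": (-0.7, 0.2),
    "surprise": (0.1, 0.9),
}


def nearest_label(valence: float, arousal: float) -> str:
    """Force a point on the valence-arousal plane into one discrete label."""
    point = (valence, arousal)
    return min(PROTOTYPES, key=lambda name: math.dist(PROTOTYPES[name], point))


calm_contentment = (0.6, -0.6)  # pleasant, low arousal
excitement = (0.7, 0.9)         # pleasant, high arousal

# Two clearly different states end up with the same label.
print(nearest_label(*calm_contentment))
print(nearest_label(*excitement))
```

With these assumed placements, calm contentment and excitement both come out as "happiness": the difference in arousal disappears as soon as the label is assigned.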

Given these contradictions, should AI systems rely on assumptions that may be fundamentally flawed? Emotion recognition AI is built on potentially biased models that may fail to capture the full range of human emotional expression, leading to serious limitations:

Overgeneralization: Assuming a frown always means sadness, or a smile always means happiness, without considering nuance or intent.

Cultural Bias: Trained mostly on Western facial datasets, making it less accurate for people from different cultural backgrounds.

Context Ignorance: AI does not consider the situation, missing key social or environmental cues that shape emotional meaning.

In short, if humans themselves struggle to define emotions universally, how can AI be expected to get it right?

Bias, surveillance, and loss of privacy

Emotion recognition AI doesn’t just raise questions about scientific validity — it also comes with severe ethical and human rights implications. These systems rely on highly sensitive biometric data, yet their deployment often lacks transparency, oversight, and consent. This raises critical concerns about privacy, discrimination, and mass surveillance.

Privacy Risks

Emotion recognition requires the mass collection of biometric data, including facial expressions, voice patterns, and physiological signals. The problem? Many of these systems operate without informed consent. From AI-powered job interviews to retail surveillance, people are often analyzed without even knowing it, making it nearly impossible to opt out or challenge potentially biased assessments.

Discrimination Risks

Emotion recognition AI doesn’t perform equally across all demographics — and this inequality can have serious consequences.

Racial Bias:

Studies show that emotion recognition AI is less accurate for people of color, frequently misinterpreting neutral expressions as angry or untrustworthy.

Neurodiversity Blind Spots:

AI models fail to account for neurodivergent individuals, such as autistic people, whose emotional expressions may differ from neurotypical patterns. As a result, these systems may wrongly flag autistic individuals as “deceptive,” “unengaged,” or even “suspicious”, reinforcing harmful stereotypes.

Mass surveillance & social control

Emotion recognition technology is increasingly used as a surveillance tool — often with authoritarian implications.

In China, schools have implemented emotion AI to monitor student attentiveness, tracking facial expressions to determine whether students are “engaged” in class. Following Paul Ekman’s theory, the devices tracked students’ behavior and read their facial expressions, grouping each face into one of seven emotions: anger, fear, disgust, surprise, happiness, sadness and what was labeled as neutral. This raises concerns about mental autonomy and forced emotional conformity.

In law enforcement, some agencies have experimented with “predictive policing”, using emotion AI to detect “aggressive behavior” in public spaces. However, preemptively labeling individuals as threats based on AI-driven emotion analysis is not only scientifically unreliable but also deeply dystopian.

AP Photo/Mark Schiefelbein, source

The UX dilemma: Designing for emotion AI

Emotion recognition AI presents a paradox for UX designers: on one hand, it promises more intuitive, emotionally aware interfaces; on the other, it risks misinterpreting emotions, reinforcing biases, and eroding user trust. This creates a fundamental dilemma:

Should UX designers embrace emotion AI to create “smarter” interactions?

Or do its flawed science and ethical concerns make it too risky to use at all?

At its core, UX design is about understanding and improving human experiences — but how can we do that when AI itself misunderstands emotions?

Proponents of emotion AI argue that systems capable of recognizing and responding to emotions could enhance digital experiences — making virtual assistants more empathetic, customer service more responsive, and online learning more adaptive. A well-designed emotion-aware system could adjust its tone, recommendations, or interactions based on the user’s emotional state.

But here’s the problem — AI does not actually “understand” emotions; it simply detects patterns in expressions, tone, or biometrics and labels them. This raises a critical UX issue:

What happens when AI gets it wrong?

How does a user challenge an incorrect emotional assessment?

Should interfaces present AI-detected emotions as facts, probabilities, or just suggestions?
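The last question can be made tangible with a small Python sketch. Everything in it is hypothetical: `EmotionEstimate` stands in for whatever a classifier returns, the copy strings are placeholders, and the `min_confidence` threshold of 0.6 is an arbitrary assumption rather than a recommended value. It only illustrates how the same model output reads very differently depending on how the interface frames it.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class EmotionEstimate:
    """Hypothetical classifier output: one score per label, summing to 1."""
    scores: Dict[str, float]

    def top(self) -> Tuple[str, float]:
        label = max(self.scores, key=self.scores.get)
        return label, self.scores[label]


def as_fact(est: EmotionEstimate) -> str:
    # Hides all uncertainty and leaves the user nothing to contest.
    label, _ = est.top()
    return f"You are feeling {label}."


def as_probability(est: EmotionEstimate) -> str:
    # Exposes the score, so a weak guess looks like a weak guess.
    label, p = est.top()
    return f"Estimated {label}: {p:.0%} (model guess, may be wrong)."


def as_suggestion(est: EmotionEstimate, min_confidence: float = 0.6) -> str:
    # ASSUMPTION: 0.6 is an arbitrary cut-off chosen for illustration.
    label, p = est.top()
    if p < min_confidence:
        return "No clear signal. How are you feeling right now?"
    return f"This might be {label}. Is that right? [Yes] [No] [Stop asking]"


weak = EmotionEstimate({
    "happiness": 0.34, "surprise": 0.30, "sadness": 0.12,
    "anger": 0.10, "fear": 0.08, "disgust": 0.06,
})
for render in (as_fact, as_probability, as_suggestion):
    print(render(weak))
```

Here the top score is only 34%, yet the "fact" framing states it with full certainty. The suggestion framing declines to guess and hands the question back to the user, which also gives them a way to challenge the assessment.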

If an AI misreads frustration as aggression, or politeness as happiness, it could lead to misguided interactions, biased decisions, or even harm — especially in high-stakes areas like mental health, hiring, or education.

This puts UX designers at a crossroads. Should we build interfaces that rely on AI’s limited understanding of emotions, knowing the risks of bias and misinterpretation? Or should we push back, advocating for systems that respect emotional complexity rather than reducing it to data points?

Perhaps the real challenge is not how to perfect emotion recognition AI — but whether we should be designing for it at all.

The UX of emotion recognition: Can AI truly read feelings? was originally published in UX Collective on Medium.