AI is reshaping UI — have you noticed the biggest change yet?

AI is reshaping interactions as we know them, driving a new UI paradigm. Let’s break down how.

Goodbye commands, hello intent

The way we interact with software is anything but static. Sometimes it evolves gently, other times it takes a jarring leap. Today, a growing wave of design pioneers, including Vitaly Friedman, Emily Campbell, and Greg Nudelman, are dissecting emerging patterns in AI applications and mapping out a landscape that refuses to stand still. At first glance, this might seem like yet another hype cycle, the kind of breathless enthusiasm that surrounds every new tech trend. But take a step back, and a deeper transformation becomes apparent: our interactions with digital systems are not just changing; they are shifting in their very essence.

Imagine the transition from film cameras to digital photography — suddenly, users no longer had to understand exposure times or carefully ration film. They simply clicked a button, and the device handled the rest.

AI is bringing a similar shift to UI design, moving us away from rigid, step-by-step processes and toward fluid, intuitive workflows. The very nature of interaction is changing, and, as Jakob Nielsen stressed in a recent article, this evolution demands our full attention. He articulates a crucial insight:

“With the new AI systems, the user no longer tells the computer what to do. Rather, the user tells the computer what outcome they want”.

This isn’t just a technological evolution — it’s a philosophical one. It challenges long-held assumptions about control, agency, and human-machine collaboration. Where once we meticulously dictated every step, we now define intentions and let AI determine the best path forward. This transformation is as profound as the move from command-line interfaces to graphical user interfaces, and for UI designers, it represents both an opportunity and a challenge.

Tapping, swiping, asking: How interaction is evolving

Before we dive into how AI is reshaping interaction, it’s worth reflecting on what has defined our most intuitive interfaces so far. In 1985, Edwin Hutchins, James Hollan, and Don Norman published a seminal paper on direct manipulation interfaces. Norman later set out some of the most widely accepted design principles in “The Design of Everyday Things”, and Hutchins pioneered the concept of distributed cognition. In that 1985 paper, however, the three captured a pivotal moment in design history, when direct manipulation was emerging as a dominant strategy.

NN/g defines it this way: “Direct manipulation is an interaction style in which users act on displayed objects of interest using physical, incremental, and reversible actions whose effects are immediately visible on the screen.”

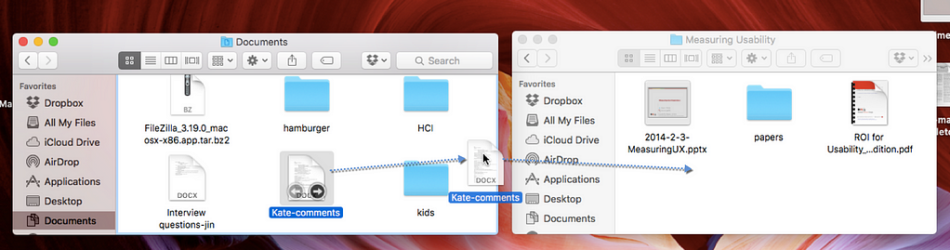

What does this mean in simple terms? Say you need to move a file from one folder to another. This is a classic example of direct manipulation: you see the file, grab it, and move it exactly where you want it to go.

You start by recognizing your goal (1). Then, you locate the file in its current folder and decide to drag it to the new location (2). You click and hold the file, move it across the screen, and drop it into the target folder (3).

If you accidentally drop it in the wrong place, you immediately see the result, adjust your approach, and drag it again until it lands where you intended. This kind of interaction feels intuitive because it minimizes cognitive effort: the system responds to your actions in real time, reinforcing a sense of direct engagement and control.

The smoother this process, the more natural and satisfying the interaction feels.

Moving a file on macOS using direct manipulation: drag it out of the source folder and drop it into the destination folder. Source

While reducing what the authors call “distance”, the gap between a user’s goals and what the interface lets them express, improves usability, what truly defines direct manipulation is engagement. The authors write:

“The systems that best exemplify direct manipulation all give the qualitative feeling that one is directly engaged with control of the objects — not with the programs, not with the computer, but with the semantic objects of our goals and intentions.”

Direct manipulation has remained a foundational design principle for decades. However, as we transition into AI-driven systems, we must consider how these principles evolve — and when they give way to goal-oriented interactions.

Now, think of Windows Photos’ AI-powered ‘Erase’ feature. Say you take a picture of your dog, but there’s an unwanted leash in the shot. Instead of manually selecting pixels and meticulously editing them out, as you would have done a decade ago, you simply select the leash and let the AI handle the rest. The system understands your goal — remove the leash — and executes the best possible solution.

Windows Photos. Source

This interaction still involves some level of manipulation, as you must indicate the object to be erased, but the difference is that you are refining a request rather than directly altering pixels. You are no longer meticulously editing every detail; you are collaborating with the system to achieve a desired outcome. This shift marks a fundamental evolution in UI design.

Desolda and fellow researchers captured this dynamic in a model based on Norman’s gulfs of execution and evaluation: the gap between what users want to do and the actions a system offers, and the gap between what the system shows and what users can interpret. Unlike straightforward direct manipulation, such as dragging a file between folders, where actions unfold step by step, AI interactions demand a more fluid, iterative process. Users articulate their goals, but instead of executing every step manually, they collaborate with the system, refining inputs and guiding the AI as it interprets, adjusts, and responds.

The continued relevance of direct manipulation

AI may be reshaping the way we interact with technology, but direct manipulation isn’t going anywhere. Even in an era of intent-based interfaces, users will still need to engage with AI systems, guiding them with the right inputs to translate human goals into machine-readable instructions. Designing AI experiences isn’t about replacing direct manipulation — it’s about enhancing it, layering new interaction models on top of well-established patterns to make interactions smoother, more intuitive, and ultimately, more powerful.

To design seamless AI experiences, we need to recognize and build on familiar patterns.

For instance, in many AI applications, an open-ended prompt field acts as an icebreaker, helping users get the conversation started. Built upon the familiar input field pattern, which has been a standard UI component for decades, this method now serves a new role. Whether it’s typing a question into ChatGPT or instructing a design tool to generate a layout, this approach provides flexibility while guiding user intent in an intuitive and approachable way.

Open input pattern examples, Source

This approach isn’t limited to interaction patterns — it extends into UX frameworks as well.

For example, Evan Sunwall introduced ‘Promptframes’ to complement traditional wireframes by integrating prompt writing and generative AI into the design process. The goal is to increase content fidelity and accelerate user testing by bringing AI-powered content generation earlier into the workflow. Yet the concept is built on the foundation of wireframes, which shows how important it is to understand traditional UX structures when designing AI-driven experiences.

Final thoughts

The best interface experiences are the ones users don’t notice. They don’t demand your attention or make you think about how to use them — they just work, letting users focus on what they came to do. AI, when done right, follows this same principle. It doesn’t need neon “powered by AI” labels; it should weave itself so seamlessly into the user journey that it feels like a natural extension of intent.

Take Netflix’s recommender system. It doesn’t interrupt your experience to remind you it’s using advanced algorithms. It doesn’t ask you to configure a dozen settings. Instead, it quietly learns, adapts, and presents suggestions that feel effortless — so much so that you rarely stop to think about the system behind it. That’s what AI-driven interaction should be: not a feature you have to wrestle with, but an invisible assistant that refines itself around your needs.

As we move toward intent-driven systems, this is the bar designers should aim for. AI should reduce friction, not add complexity. It should empower users, not overwhelm them with unnecessary choices. The best AI isn’t the one that demands attention — it’s the one that disappears into the flow of what you were trying to accomplish in the first place.

AI is reshaping UI — have you noticed the biggest change yet? was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.