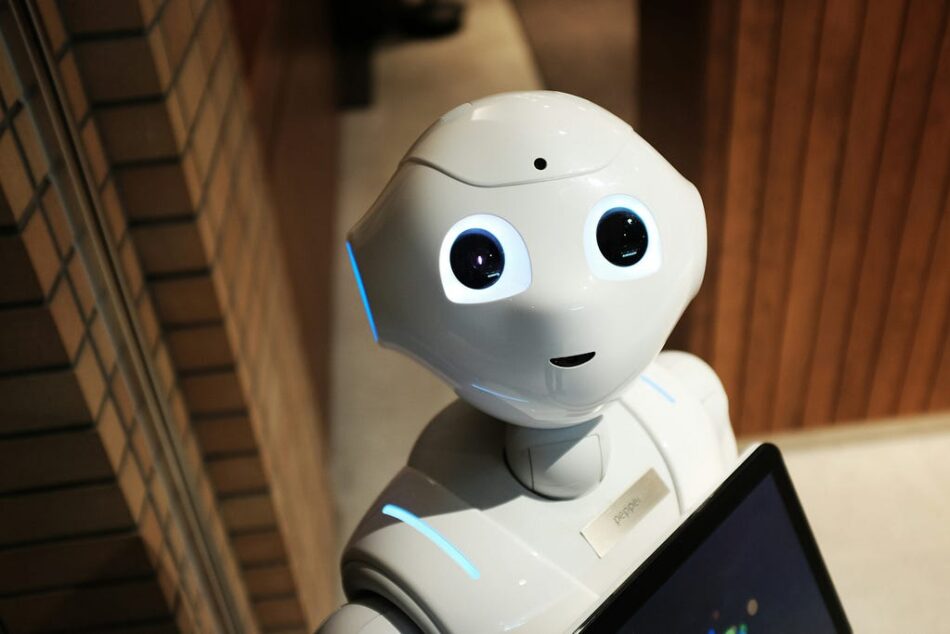

The future of our global society could look bleak if AI is left unregulated, but these five responsible technologists have ideas on how to change it.

Photo by Alex Knight on Unsplash

Warren Buffett’s business partner, Charlie Munger, once said: “Show me the incentive, and I will show you the outcome.”

Attention has become the main incentive in our current economy. The more attention you receive, the more money you make.

By Munger’s logic, we shouldn’t be surprised that, as of 2019, over half (57%) of Gen Z wanted to be social media influencers. Why become an astronaut or a firefighter when you can be famous and make five figures a month by age 12? Some kids are even making millions.

So what’s the harm in this? The dark side is that many of these same children are at risk of severe mental health issues at some point in their lives because of their social media use, and we have few, if any, regulations on children’s access to it.

Attention is a double-edged sword and a finite commodity. It reflects our priorities, and when one thing absorbs all of it, we have none left for more important demands like our health and well-being, our real-life communities, or the planet.

[The Rise of AI..] will either be the best thing that’s ever happened to us, or it will be the worst thing. If we’re not careful, it may very well be the last thing. — Stephen Hawking

Canaries in the coal mine

The good news is some humane and responsible technologists out there see the writing on the wall and are trying (desperately) to do something about it.

There are many of them, but these five are having tough conversations with governments, big tech, and other technologists. They are bringing to light the issues now unfolding with social media, generative AI, and LLMs, and they are trying to rewrite the script so that we design more ethically from here on, for a brighter future.

Tristan Harris – Design Ethicist and Co-Founder of the Center for Humane Technology

Photo by Stephen McCarthy/Collision via Sportsfile

A former Google design ethicist, Harris has spoken out about the negative impacts of persuasive design in tech products, particularly on mental health and attention spans. He also argues that AI technology is moving far faster than the human brain can adapt.

Our brains are not matched with the ways that technology is influencing our minds. — Tristan Harris

In a speech at the 2023 Nobel Prize Summit, Harris described humanity’s first contact with AI: the algorithms that decide which videos users see as they scroll through social media.

“How did that turn out?” he asks the audience. They respond with laughter.

We all know how it turned out because we’re still battling its effects, the ones he calls out in the screenshot below:

- Information overload
- Addiction
- Doomscrolling
- Influencer culture
- Sexualization of kids
- Cancel culture
- Shortened attention spans
- Polarization
- Bots, deep fakes
- Cult factories
- Fake news
- Breakdown of democracy

Screenshot of YouTube video from The Nobel Prize

Incorporating AI into social media has, in some way, contributed to the breakdown of democracy.

Let that sink in.

Are we, as designers, assuming this level of accountability for what we build? We need to start.

According to Harris, we are now moving into our second contact with AI: LLMs. He warns that we haven’t yet fixed the rampant problems from the first contact on social media, much of which was developed with a goal similar to Zuckerberg’s: more personalized ads.

In my job, we frequently discuss personalizing content. It’s the future, and companies everywhere feel immense pressure to compete in the growing attention economy. But personalization is a double-edged sword; like any tool, it depends on how it’s wielded. It can deliver helpful information to people, but it can also lock them into echo chambers of belief, detaching them from a shared reality.

Harris is now co-founder of the Center for Humane Technology (CHT), a nonprofit whose mission is to align technology with humanity’s best interests. He wants us to stop thinking so narrowly and consider how we can leverage human wisdom to match the growing complexity of our world.

He proposes that instead of designing apps that keep people scrolling through virtual communities and influencers, we design technology that supports people’s real needs, such as growing their local community.

How does technology embrace these aspects of what it means to be human and design in a richer way? — Tristan Harris

Timnit Gebru – AI Ethics Researcher

Photo by Victor Grigas

In 2020, Gebru, then co-lead of Google’s Ethical Artificial Intelligence Team, was fired after voicing concerns about racial bias in the workplace and in AI. Before she left, she had built one of the most diverse AI departments.

In 2019, Gebru and other researchers “signed a letter calling on Amazon to stop selling its facial-recognition technology to law enforcement agencies because it is biased against women and people of color.” They cited an MIT study showing that Amazon’s facial recognition system had more difficulty identifying darker-skinned women than other companies’ systems did.

She has also emphasized the risks of large language models (LLMs), which are key to Google’s business (Technology Review), and the biases of AI facial scanning technology. Take, for example, Google Photos labeling Black people as ‘gorillas’ in 2015 (USA Today).

If that doesn’t sound bad enough, look at this global map of countries already using facial scanning technology in public.

View of Global Government Use of Facial Technologies from Comparitech

We ask whether enough thought has been put into the potential risks associated with developing them [AI LLMs] and strategies to mitigate these risks. — Timnit Gebru

She also highlights the following concerns about AI:

- Massive environmental and financial costs further contribute to the wealth gap.
- There is a risk that racist, sexist, and other inappropriate language could end up in the data used to train the model.
- There is a divide between those with data to train models and those without, which further adds to bias and discrimination.
- Researchers worry that big tech prioritizes building LLMs that can manipulate language without fully understanding it. This is profitable but limited, and it could hinder more impactful advances.
- LLMs are so good at imitating human language that it’s easy to fool people. They are not always accurate and can lead to dangerous misunderstandings.

Gebru co-founded Black in AI, which works to grow the Black AI research community and build more diversity in AI, and she has since founded the Distributed Artificial Intelligence Research Institute (DAIR).

Jaron Lanier – Interdisciplinary Scientist and Leader in Virtual Reality

Photo by JD Lasica — CC BY 2.0

Often called the “Godfather of Virtual Reality,” Lanier warns about technology’s downsides, such as social isolation and the potential for manipulation through experiences like VR.

“To me the danger is that we’ll use our technology to become mutually unintelligible or to become insane if you like, in a way that we aren’t acting with enough understanding and self-interest to survive, and we die through insanity, essentially.” — Jaron Lanier, The Guardian

Lanier’s point tracks with Tristan Harris’s forecast of growing, unmanageable global complexity. That does sound like enough to drive people insane, especially if they are also isolated.

Bruce Schneier – Public-Interest Technologist

Photograph by Rama, Wikimedia Commons, CC BY-SA 2.0 FR

A renowned security expert and author, Schneier has criticized tech companies’ mass collection of personal data and its potential misuse.

We need trustworthy AI. AI whose behavior, limitations, and training are understood. AI whose biases are understood, and corrected for. AI whose goals are understood. That won’t secretly betray your trust to someone else. The market will not provide this on its own. Corporations are profit maximizers, at the expense of society. And the incentives of surveillance capitalism are just too much to resist. — Bruce Schneier

As cybersecurity threats increase and AI technology advances, our institutions are lagging behind in protecting consumers from sophisticated phishing, data breaches, and deepfakes.

Alka Roy – Responsible Innovation Project

Screenshot from YouTube https://youtu.be/kLMlFDhlc2g

Alka is a keynote speaker, responsible innovation leader, and the founder of the Responsible Innovation Project. She’s a wealth of information and has a knack for articulating complex concepts clearly.

She believes that businesses can (and must) do better, and she is a staunch advocate for bringing ethics into business AI strategies and product development.

It is not just about ensuring a prosperous and just society; if ethics are not included in business AI strategies and product development, businesses can experience rejection by consumers and declining public opinion about AI. — Alka Roy

In 2020, Responsible Innovation (RI) Labs and the Responsible Innovation Project published a Responsible AI research survey, developed with help from the AI community. The survey found that the top five concerns about AI were:

- Transparency
- Accountability
- Privacy
- Data integrity
- Surveillance

If we keep moving towards over-reliance on technology leveraged by the concentrated power and wealth of a few without a lack of responsibility and accountability to the well-being of people and the environment, what kind of future can we expect? — Alka Roy

Become a Humane/Responsible Technologist

If this article has convinced you to join the mission of building more responsible technology, I recommend the following trainings.

They are all free, and I recently completed them myself. I have no connection to them and do not receive any incentives for recommending them, but they are worth your time.

- Foundations in Humane Technology – Center for Humane Technology
- Ethics in AI and Data Science – The Linux Foundation (Instructor: Alka Roy)
- Practical Data Ethics – ethics.fast.ai
- Start Your Responsible Tech Journey – All Tech Is Human

Beth J is a Global UX Design Lead, solopreneur, and burnout coach. She writes about UX design, human behavior, burnout, solopreneurship, and challenging the status quo.


AI ethicists are speaking out, but are we listening? was originally published in UX Collective on Medium.