Designing the future.

AI micro UI components

AI is set to revolutionize the global economy, potentially contributing $15.7 trillion by 2030, more than the combined output of China and India. Healthcare is among the sectors most likely to be profoundly transformed. However, during my time at NHS Digital (the digital provider of the UK’s National Health Service), I repeatedly saw systems that were not designed to fit into existing clinical workflows. They created additional work for clinicians, led to manual workarounds, and often caused errors because data had to be entered manually across systems that did not talk to each other. The concern is that AI systems designed without UX at the center will cause even further disruption.

From diagnostic support to consumer health tracking tools, UX is crucial in ensuring that AI-driven healthcare solutions are not only practical and effective but also user-friendly. This article explores the intersection of UX and AI in the healthcare sector, examining the role of UX in AI-enabled healthcare tools and current trends, and offering guidance on designing better AI-driven experiences. We begin with the role UX plays in AI healthcare applications.

The Role of UX in AI

AI is shifting the command-based human-computer interaction paradigm that has dominated for the past 60 years. The command-based paradigm unfolds like this: the user does something, and the computer responds. Then, the user sees the result on-screen and decides what the following action should be. The user taps, clicks, and enters some text, possibly hundreds of times, until the desired result is achieved. The computer has no idea what the end result or the next step is—that is always decided by the human.

With AI, however, this dynamic shifts dramatically. Users specify their desired goal, and the AI system determines how to accomplish it. For instance, rather than manually creating a vector illustration by selecting tools and making countless adjustments, users can now instruct an AI like DALL-E to “create a vector graphic to illustrate AI-driven healthcare, using simple shapes and bold colors.” A few seconds later, users have an illustration they can use. While this approach saves time, it also introduces new challenges. AI-generated results may not always align with user intentions, and the opacity of AI processes can make iterative refinement difficult.

Opening up the black box

Consider a radiologist reviewing a lung X-ray that the AI has flagged as normal when, in fact, the scan shows concerning lesions. In one Danish study, researchers found that four leading commercially available AI tools performed worse than radiologists at detecting lung disease when more than one health problem was present. If the radiologist deems a scan concerning but the AI repeatedly misses similar scans, the lack of transparency in the AI’s decision-making process becomes a hindrance and rightly calls the AI’s accuracy into question. Furthermore, radiologists often cannot provide meaningful feedback to improve the AI, as they don’t understand why the AI made its judgment in the first place. It is, therefore, up to the designers of AI systems to address this opaqueness and make AI more transparent and interpretable to users.

An issue further compounded by the black-box nature of AI is bias. Bias in healthcare AI has been well documented, from AIs trained on male cardiovascular symptoms that fail to detect heart disease in women to AIs that struggle to identify melanoma in people with darker skin.

The reasons for bias can be, and often are, multiple. Bias can occur because the training dataset does not contain enough data on specific groups of people for the AI to learn and identify patterns correctly. In other cases, there may be no data, or no recent data, for a specific population. Another type of bias occurs when the algorithm performs poorly on data that differs from its training data, as in the example of detecting melanoma on darker skin tones. Finally, bias can also occur when the AI does not perform well outside of lab conditions, such as the Google Health example in Thailand, where an AI failed to identify diabetic retinopathy on images taken under varying light conditions.

Once again, UX has a role in helping mitigate the effects of the biases inherent to these systems. For example, AI outputs should explain which data went into the decision, whether any relevant data was missing, and what level of confidence the AI has in a particular prediction. A recent movement around eXplainable AI (XAI) has emerged, calling for precisely that: the design of AI systems that are more understandable and transparent to humans.
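One way to picture such an explanation is as a small structured payload that the interface renders next to each AI finding. The sketch below is illustrative only: the `PredictionExplanation` class, its field names, and the confidence thresholds are assumptions made for this example, not part of any real clinical system or XAI library.

```python
from dataclasses import dataclass, field


@dataclass
class PredictionExplanation:
    """Hypothetical payload a UI could render alongside an AI finding."""
    finding: str
    confidence: float  # model-reported score in [0, 1] (assumed to be available)
    inputs_used: list[str] = field(default_factory=list)
    inputs_missing: list[str] = field(default_factory=list)


def confidence_label(confidence: float) -> str:
    """Map a raw score to a plain-language band. Thresholds are illustrative."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= 0.9:
        return "high"
    if confidence >= 0.7:
        return "moderate"
    return "low"


def render_summary(explanation: PredictionExplanation) -> str:
    """Build the text a clinician would see next to the finding."""
    band = confidence_label(explanation.confidence)
    lines = [
        f"AI finding: {explanation.finding}",
        f"Confidence: {band} ({explanation.confidence:.0%})",
        "Based on: " + (", ".join(explanation.inputs_used) or "no inputs recorded"),
    ]
    # Only show the "Missing" line when something relevant was unavailable.
    if explanation.inputs_missing:
        lines.append("Missing: " + ", ".join(explanation.inputs_missing))
    return "\n".join(lines)


example = PredictionExplanation(
    finding="possible lesion, left lower lobe",
    confidence=0.62,
    inputs_used=["chest X-ray (PA view)"],
    inputs_missing=["prior imaging"],
)
print(render_summary(example))
```

Showing a plain-language band next to the raw percentage is a design choice, not a requirement. The point is that the data used, the data missing, and the confidence level travel together with the finding instead of being hidden behind it.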

A further study looked at whether AI influenced radiologists’ decision-making. The researchers found that radiologists were more likely to make mistakes when the AI diagnosis was wrong. Human overreliance on AI can have negative consequences, especially for less experienced professionals. They also found that this negative effect could be mitigated with better UX design. Radiologists were less likely to over-rely on the AI if:

- the AI’s diagnosis would not be recorded on the patient’s electronic health record;
- the AI drew a box on the X-ray outlining where it thought the lesions were.

In other words, radiologists were more comfortable disagreeing with the AI if there was no record of them doing so. They were also more likely to challenge the AI’s diagnosis if it was clear what the AI was basing that diagnosis on. Given how fallible AIs can be in diagnostics, it’s paramount that designers understand the contexts within which they’re being used, who is using them, and how their design can be optimized to avoid negative impacts on patients and clinicians. Ensuring that AI systems are genuinely beneficial in real-world healthcare settings rather than just being technological novelties is a key challenge for UX designers. Healthcare professionals, especially those in high-stress environments, need AI systems that are not only accurate but easy to use and trustworthy.

Finally, before diving into a set of UX principles to bear in mind when designing AI-enabled healthcare applications, it is worth saying that AI should be a tool clinicians use to support their work rather than a replacement for humans.

Key UX Principles for AI in Healthcare

UX Design Principles for AI-enabled healthcare applications

Transparency

Clinicians must understand when AI is intervening and what it’s doing while maintaining control over the AI. For example, in high-stakes settings like healthcare, it’s essential that clinicians are able to identify whether AI or a fellow human generated a diagnosis or treatment plan. If AI-generated, clinicians need the ability to drill down into the data and reasoning behind those decisions and understand the system’s level of confidence in the results.

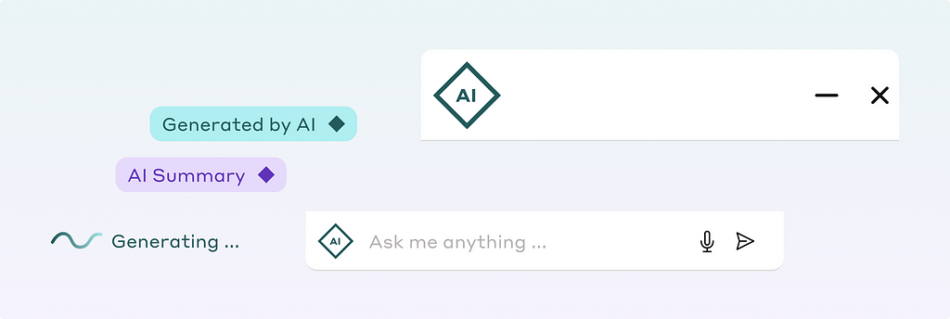

Productboard labels whenever AI is used to generate content.

Interpretability

Machines that can explain how they reached a particular outcome are more likely to gain human acceptance and be less biased. Much work has focused on developing the accuracy and breadth of AI applications. However, from a UX point of view, we must now focus on how the system explains its results to users. It’s crucial to make AI systems’ decision-making processes transparent. For instance, a clinician should be able to understand why a specific treatment plan is suggested, how patient history and symptoms were considered, and whether, for example, the latest research was factored into the recommendation.

Segment allows users to drill down into the reasoning and data behind its AI predictions.

Controllability

Clinicians should retain complete control over AI systems, including the ability to undo, dismiss, or modify AI-generated decisions. Effective UX design ensures that users can easily manage AI outputs and gives them confidence that the AI is not acting on clinicians’ behalf without approval or oversight, maintaining trust in the technology.
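The accept, modify, dismiss, and undo actions described above can be sketched as a small state model in which nothing the AI proposes can be saved until a clinician has acted on it. This is a minimal illustration under stated assumptions: `AISuggestion`, `Status`, and `can_be_saved` are hypothetical names invented for this example, not an existing API.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING = "pending"      # AI has proposed something; no clinician action yet
    ACCEPTED = "accepted"
    MODIFIED = "modified"
    DISMISSED = "dismissed"


@dataclass
class AISuggestion:
    """Hypothetical AI-generated suggestion awaiting clinician review."""
    text: str
    status: Status = Status.PENDING
    history: list = field(default_factory=list)  # earlier (text, status) pairs, for undo

    def _snapshot(self) -> None:
        self.history.append((self.text, self.status))

    def accept(self) -> None:
        self._snapshot()
        self.status = Status.ACCEPTED

    def modify(self, new_text: str) -> None:
        self._snapshot()
        self.text = new_text
        self.status = Status.MODIFIED

    def dismiss(self) -> None:
        self._snapshot()
        self.status = Status.DISMISSED

    def undo(self) -> None:
        """Restore the state before the most recent clinician action, if any."""
        if self.history:
            self.text, self.status = self.history.pop()

    @property
    def can_be_saved(self) -> bool:
        # Only suggestions a clinician has explicitly accepted or edited qualify.
        return self.status in (Status.ACCEPTED, Status.MODIFIED)


suggestion = AISuggestion("Refer to cardiology")
suggestion.modify("Refer to cardiology (urgent)")
suggestion.undo()  # back to the original text, still pending
```

The default `PENDING` state is the important design choice here: the AI's output is inert until a human decides what to do with it.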

In Arc, the user takes the initiative to ask the AI to summarize a page. In this way, there’s no confusion over whether the summary is human- or AI-generated.

Adaptability

The AI should learn from clinician feedback, whether that’s input on how to read medical imagery more accurately or specific preferences on treatment plans. The AI needs to be able to receive feedback and adapt its output accordingly. As designers, we must ensure user feedback loops are built into AI systems. On the other hand, AIs must also be stable and accurate enough to assist clinicians without requiring constant feedback. If clinicians find themselves frequently correcting AI outputs, they may lose trust in these systems and revert to manual methods instead.

Marlee helps users improve their work performance and team dynamics by evaluating their responses to a comprehensive questionnaire. Users can provide additional information for the AI-calculated metrics through the “Tell me more” feature.

Trustworthiness

Does the AI convey trustworthiness? Not only in the accuracy of its outputs but also in how information is presented. Humans tend to attribute human qualities to AI systems, so does the system’s output come across as professional, accurate, organized, and transparent? A confusing UI that obfuscates AI decisions can erode confidence. UX design should ensure that AI systems communicate clearly, professionally, and transparently.

Limbic uses AI to calculate a series of assessment scores while also presenting what the patient said in their own words and a clear link to see the patient’s entire input.

Expectation Setting

Users often overestimate or underestimate AI capabilities, mainly due to a lack of understanding of how these systems work. As designers, it’s essential to set clear expectations about what the AI can and cannot do. In clinical settings, AI should not mimic human behavior but rather be transparent about its non-human nature and limitations. Acknowledging a limitation could, for example, be explicitly informing the clinician that the AI was trained on a dataset that does not demographically match the patient at hand.

Marlee makes it clear that the user is talking to an AI that has a specific function.

Hybrid Modes of Interaction

The future will likely include a blend of intent-based interfaces, where the user states their goal or desired end result, such as “summarise patient notes in 1 sentence for handover”, with more traditional GUI interfaces for interacting with the AI, tweaking results, and providing feedback. We can already see examples of this blend emerging in applications such as Heidi Health, where the patient consultation is transcribed and summarised on behalf of clinicians. The clinicians can then use the GUI to change the template format of the transcription, edit the text with a keyboard and mouse, or give further voice commands to the AI. The GUI also displays common actions that the user might not know to ask for if they weren’t shown on-screen. This matters because purely intent-based AI interfaces have command discoverability issues: they are limited by what the user imagines the AI can do and by what the user can articulate in writing as a request.

Screenshot of Heidi Health’s AI-enabled transcription application.

Human-centered AI

AI should address real-world problems, following user needs rather than technological possibilities. Not every task requires AI intervention, and designers must critically assess whether AI enhances or hinders the current workflows and tools clinicians and patients use. Google Health’s experience in Thailand highlights this: an AI developed to detect diabetic retinopathy struggled in real-world clinics due to variations in equipment and image quality. This case study underscores the need to test AI systems in their intended environments before widespread deployment.

Applications of AI in Healthcare

The list of AI applications in healthcare below is by no means exhaustive, but it highlights key areas where AI is already making a significant impact. Each application is paired with essential UX considerations to ensure that these technologies are user-centered.

Clinician-Facing Applications

- Automated Transcription. AI can significantly reduce clinicians’ time spent on administrative tasks by automatically transcribing patient consultations and updating medical records. However, clinicians must be able to review and verify these transcriptions for accuracy. Clinicians must also be warned if the AI has struggled to capture some of the conversation due to factors such as audio conditions or accents. Effective UX design can facilitate this by organizing information in a way that is easy to scan, summarizing key points, flagging next actions such as referrals, and alerting to missing data in ways that are discoverable and usable.
- Clinical Decision Support. AI can enhance clinical decision support systems by analyzing vast amounts of medical data, such as data from electronic health records (EHRs), uncovering insights, and recommending treatment options. UX plays a critical role in how this information is presented to clinicians. AI systems must clearly communicate the data and reasoning behind their recommendations, enabling clinicians to understand the AI recommendation and choose whether to use the insights provided.
- Imaging & Diagnostics. AI is being used to improve image quality and automate image analysis, supporting clinicians in diagnosis and even spotting patterns earlier in the progression of a disease than a human might be able to. Despite promising results, AI image analysis still struggles with real-world data. UX considerations include how these systems communicate their findings, how they clarify why the AI reached a specific diagnosis, and how they allow clinicians to query the output and provide feedback.
- Electronic Health Records (EHR) Optimization. AI can structure and summarize unstructured data from health records, making it easier for clinicians to review. UX design must ensure that this data is summarized and organized logically, with clear pathways for clinicians to access detailed information as needed, correct errors, or add information. UX designers should also ensure AIs cannot modify patient EHRs without clinician oversight and approval.
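As an illustration of the transcription point above, the sketch below marks transcript segments that the speech model was unsure about so the interface can prompt the clinician to review them. It assumes the transcription service exposes a per-segment confidence score. The `Segment` class, the `[REVIEW]` marker, and the 0.8 threshold are all hypothetical choices for this example.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """Hypothetical unit of a consultation transcript."""
    speaker: str
    text: str
    confidence: float  # assumed per-segment score from the speech model, in [0, 1]


def flag_uncertain(segments, threshold=0.8):
    """Pair each segment with a needs_review flag. Threshold is illustrative."""
    return [(seg, seg.confidence < threshold) for seg in segments]


def render_transcript(segments, threshold=0.8):
    """Prefix uncertain segments with a visible marker for the clinician."""
    lines = []
    for seg, needs_review in flag_uncertain(segments, threshold):
        marker = "[REVIEW] " if needs_review else ""
        lines.append(f"{marker}{seg.speaker}: {seg.text}")
    return "\n".join(lines)


transcript = [
    Segment("Clinician", "Any chest pain?", 0.97),
    Segment("Patient", "Only when climbing stairs", 0.55),
]
print(render_transcript(transcript))
```

In a real interface the marker would more likely be a highlight or inline icon than a text prefix, but the principle is the same: uncertainty is surfaced where the clinician is already reading, not buried in a log.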

Real-world deployments of AI in healthcare have demonstrated that, while AI is useful as a tool supporting clinicians’ work, there is still room to improve its usability and UX. AI systems are constrained by the quality of the data they are trained on, can propagate biases, and are opaque to their users. The UX principles of Transparency, Interpretability, Controllability, Adaptability, Trustworthiness, Expectation Setting, Hybrid Modes of Interaction, and Human-Centered AI outlined above can help guide designers in creating AI-enabled healthcare applications that are more humane, fair, and usable.

The role of UX in AI-driven healthcare was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.